Welcome to “The Facebook and Twitter Scraper Guide”! In this ultimate guide, we will delve deep into the world of social media scraping. We will focus specifically on two of the most popular platforms: Twitter and Facebook.

We will explore the legal aspects of social media scraping, how to safely access and retrieve data via APIs, and a step-by-step tutorial on how to scrape Facebook or Twitter using Python. Additionally, we’ll present our top recommended tools for social media scraping and conclude with essential best practices to ensure efficient, ethical, and compliant data collection.

Let’s dive right in!

Disclaimer: This material has been developed strictly for informational purposes. It does not constitute endorsement of any activities (including illegal activities), products or services. You are solely responsible for complying with the applicable laws, including intellectual property laws, when using our services or relying on any information herein. We do not accept any liability for damage arising from the use of our services or information contained herein in any manner whatsoever, except where explicitly required by law.

Table of Contents:

- Social Media Scraping: What it is?

- Is Social Media Scraping Legal?

- Accessing Twitter and Facebook Data Safely Via Their APIs.

- How to Scrape Facebook (or Twitter) with Python?

- The Top 4 Recommended Social Media Scraping Tools.

- Best Practices for Twitter and Facebook Scraping

- Final Words.

1. Social Media Scraping: What it is?

A social scraper tool should be able to extract data from various social media platforms, including Facebook, Twitter, Instagram, LinkedIn, TikTok, blogs, wikis, and news sites. All of these social platforms have something in common: “They generate user-generated content as unstructured data that is accessible through the web.”

Data scraped from social media can provide an extensive and dynamic dataset about human behavior. It can offer valuable insights to social scientists and business experts so they can understand individuals, groups, and an entire society. Social media scraping and analytics have been used by various industries (like retail and finance) to enhance brand awareness, customer service, marketing strategies, and even detect fraud.

But beyond these applications, modern social media datasets can be used in the following areas:

- Customer sentiment measurement: Analyzing customer reviews from social media like Twitter and Facebook can help businesses understand how customers feel about their products or services. This information can be used to improve customer satisfaction and loyalty.

- Market trend identification: An automatic social media scraper like Facebook or Twitter scraper can be used to identify market trends. Scrapers can be used for tracking industry data, influencers, and publications across time.

- Online branding monitoring: Twitter scrapers or any other social media scraper can be also used to monitor online branding by tracking customer feedback. It can also be used to track competitor activity and industry trends. With this information at hand, businesses can improve their brand reputation and stay ahead of the competition.

- Image collection & processing: In the world of modern social media data sets, images are scraped from different platforms. These images are then preprocessed, which includes tasks like object detection, recognition, visual analytics, and instantly removing backgrounds. Background removal is done to isolate the main subject of the image, which can be done to protect privacy or anonymity, or for other reasons.

- Target market segmentation: By analyzing social media data, businesses can identify their target markets and tailor their marketing campaigns accordingly. This can help businesses improve their ROI.

For everything there is to learn about this topic, check our web scraping guide.

2. Is Social Media Scraping Legal?

Many people consider social media scraping illegal or shady. But in reality, it’s a legitimate activity that needs to respect certain boundaries. The boundaries of web scraping legality depend on the data being scraped and how it is being done. Generally, web scraping is legal if the data is publicly available on the internet. But, a lot of caution is needed while web scraping and especially with personal data or copyrighted content.

To ensure ethical scraping, it’s essential to be considerate of the data being collected and its purpose. Personal data and intellectual property are areas that require special attention. Understanding regulations that dictate how to handle personal data like the GDPR and CCPA is paramount.

It is also critical to understand the Terms of Use on websites, as they can restrict scraping through browsewrap or clickwrap agreements, (but most of the time their enforceability depends on proper presentation to users).

| Interesting Fact! Recent rulings have clarified that scraping publicly available data is generally not a violation. The US appeals court reaffirms legality of web scraping in a landmark ruling [source: TechCrunch]. The Ninth Circuit found that scraping publicly accessible data on the internet does not violate the Computer Fraud and Abuse Act (CFAA). |

a. Is it legal to scrape data from Facebook?

In general, scraping publicly available data from Facebook is not illegal as long as it is done in compliance with applicable laws and regulations. You should always ensure that you are scraping publicly available data and not copyrighted content or personal data (as the former two are protected by the law).

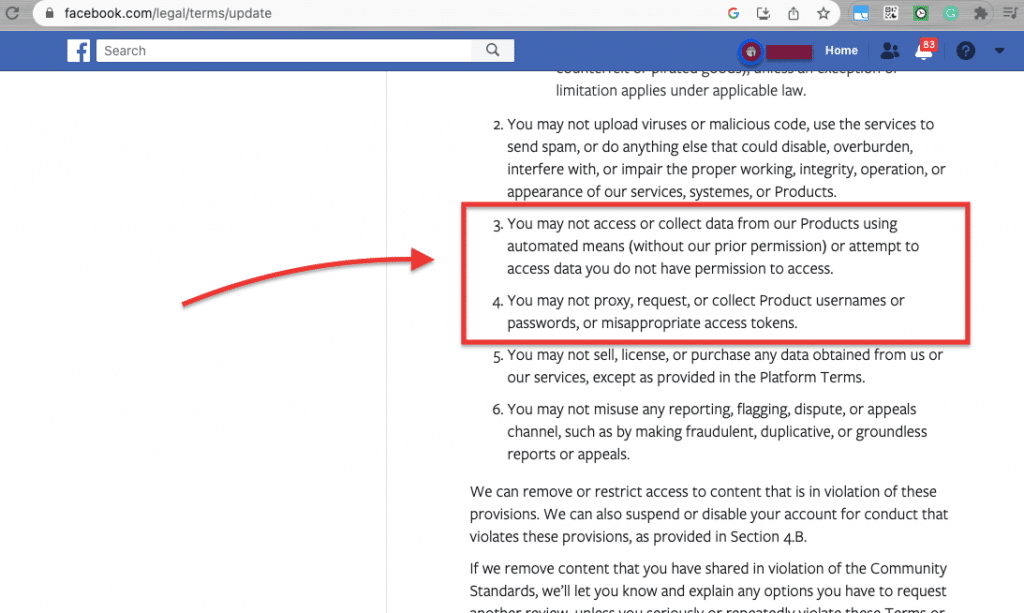

Facebook has strict policies against web scraping, which if done incorrectly, makes it a violation of its terms of service. Also, laws like GDPR ensure that personal data (from Facebook users) is protected by the law. For more information, you can read the up-to-date Facebook Terms of Service.

b. Is a Twitter scraper allowed to collect data from Twitter?

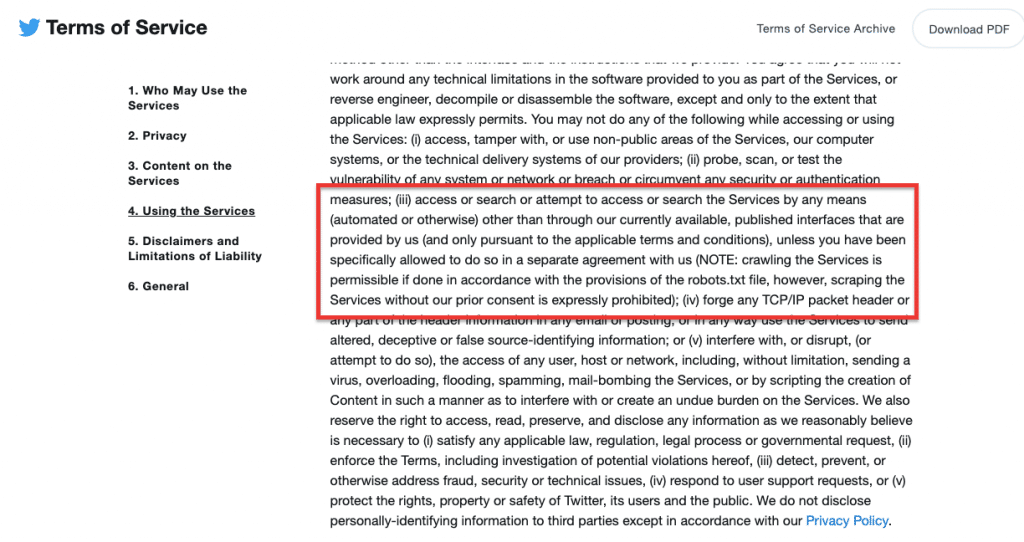

Scraping publicly accessible data from Twitter is not illegal. For example, scraping tweets from public profiles or public tweets that are visible to anyone on Twitter is considered legal. Always keep in mind not to scrape, collect or accumulate private data from Twitter (stick to publicly available data).

In addition, keep in mind that, if you collect copyrighted data publicly available (ensure not to re-use it without permission). Laws like the GDPR ensure that personal data (from Twitter users) is protected by the law. Always, stick to Twitter’s terms of service to ensure you are not breaking the law.

3. Accessing Twitter and Facebook Data Safely Via Their APIs.

Before moving forward with data scraping on Facebook and Twitter with web scraping tools, frameworks, or services, be aware that both Twitter and Facebook have official APIs. Both APIs are built to allow developers to “programmatically, automatically, and legally” access and retrieve Twitter data in a structured manner.

For instance, the Twitter API provides different endpoints for accessing tweets, user profiles, trends, and more. Also, Facebook’s Graph API enables developers to retrieve data and perform actions on behalf of users. Using both APIs ensures you are respecting users’ privacy and following their policies and terms of service.

To scrape data using the APIs, you need to follow these general steps:

To interact with Facebook or Twitter’s API, you’ll need a development environment with the necessary tools and libraries. Facebook provides official SDKs for several programming languages, including Python, PHP, JavaScript, and more (check Meta’s Developer Documentation). The Twitter API is language-agnostic and you can access it by using any programming language that can make HTTP requests and handle JSON responses.

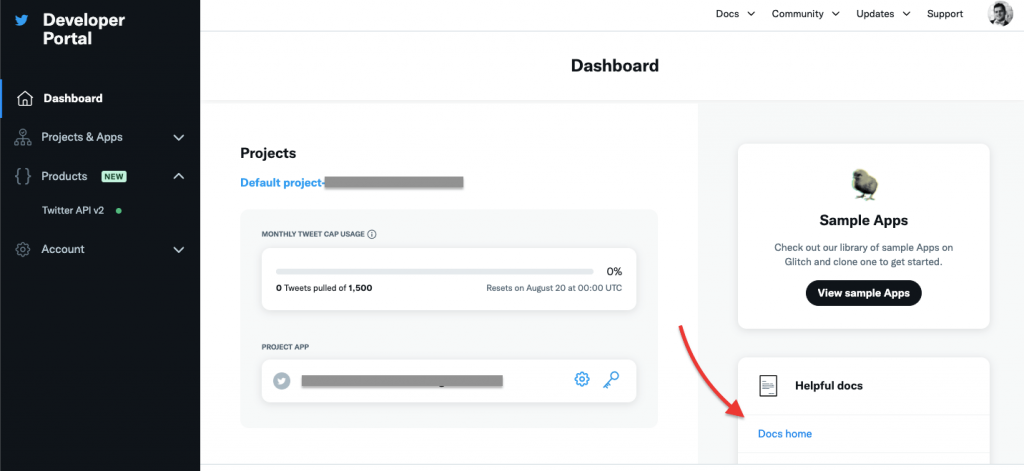

- Create Developer Accounts: Register as a developer on Facebook for their Graph API and on Twitter for their Developer API. This will give you access to the necessary API keys and credentials. Once you register, you’ll need to access the API credentials.

- Review API Documentation: Read and understand the API documentation provided by the social platforms. Familiarize yourself with the endpoints, parameters, and rate limits.

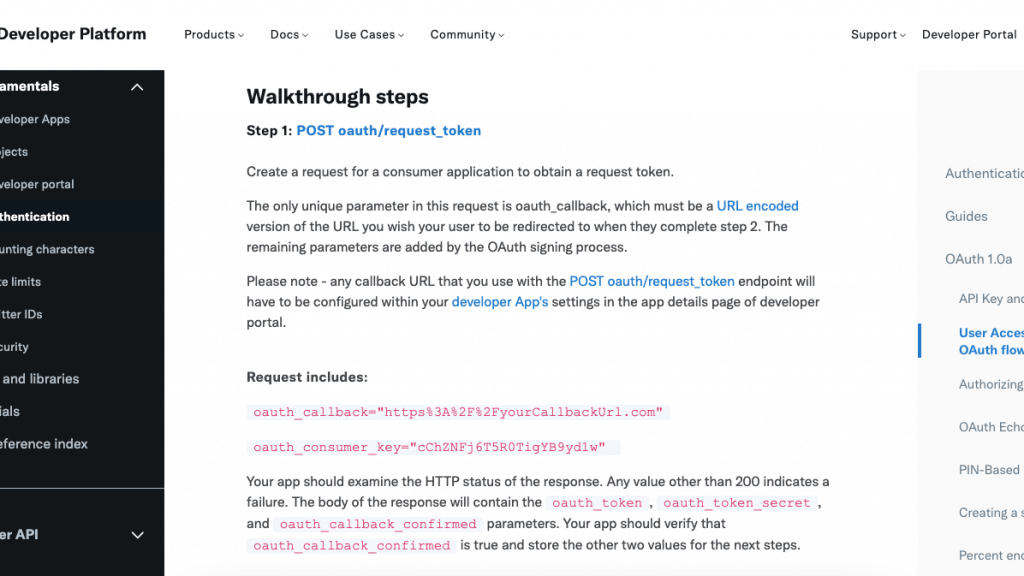

- Authenticate Your Requests: Most APIs require authentication to access certain data. Facebook and Twitter APIs use OAuth for authentication.

- Follow the authentication process outlined in the API documentation to obtain the necessary access tokens. The official documentation includes all the necessary steps to authenticate your requests.

- Start making API Requests: Use the appropriate API endpoints to make requests for the data you want to collect. For instance, Twitter’s API provides REST endpoints to access specific tweets or groups of tweets based on their IDs. These endpoints allow you to get up-to-date details, verify tweet availability, and view tweet edit history. The Tweet lookup endpoint, for instance, includes metadata for tweets and tracks the edit history using an array of tweet IDs. The API returns the most recent edit and any previous edits.

A third-party Twitter scraper vs using its API for data collection?

The Twitter API allows users to access data compliantly, but it has rate limits and may be less flexible. Scraping Twitter with other tools, on the other hand, turns out to be a lot more flexible and efficient. But using a Twitter scraper (if used incorrectly) may violate terms of service and raise legal concerns.

4. How to Scrape Facebook (or Twitter) with Python?

Python has extensive library support and a strong community; this makes it an excellent choice for web scraping tasks, regardless of the complexity or scale of the project. The programming language provides a rich selection of open-source libraries and frameworks explicitly designed for web scraping, including Scrapy, Beautiful Soup, and Selenium.

a. Breakdown of how to scrape using Python:

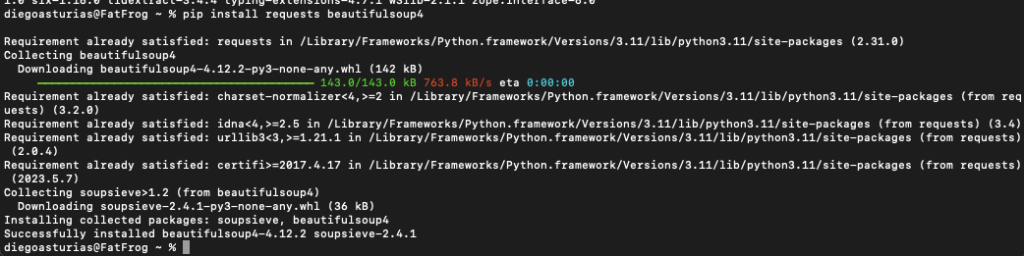

- Install the required libraries: Start by installing the specific library your project needs in your Python environment. To install a library, you can use pip, a package manager for Python. For example:

|

1 |

pip install requests beautifulsoup4 |

- Import the required libraries: Once the installation is complete, import the library into your Python code using the ‘import’ statement. For example:

|

1 2 |

import requests from bs4 import BeautifulSoup |

- Make a request: Send a request to the target website to fetch the desired information. Python offers various libraries, like “requests” to handle HTTP requests. To make requests to Twitter (or Facebook) you need to use the Twitter (or Facebook) API endpoints along with the appropriate authentication.

|

1 2 3 4 |

def get_user_tweets(username): base_url = f"https://twitter.com/{username}" # Make the request to Twitter response = requests.get(base_url) |

- Parse the HTML content: After obtaining the HTML content, parse it to extract relevant information. Python libraries like Beautiful Soup come with built-in HTML parsers, but you can also use third-party parsers like HTML5lib and lxml. For example:

|

1 2 |

# Parse the HTML content using Beautiful Soup soup = BeautifulSoup(response.text, "html.parser") |

- Locate the desired data: Python libraries offer methods to locate specific data on a web page. For instance, Beautiful Soup supports XPaths and CSS Selectors, making it easy to find and extract specific document elements. An example of locating information on a page:

|

1 2 |

# Locate the tweets on the page tweet_elements = soup.find_all("div", {"data-testid": "tweet"}) |

- Save the extracted data: Once you have scraped the required data, you can save it to a file or store it in a database for further analysis and use.

Note: Remember to explore the popular Python libraries to determine which one suits your needs. Always consider using official APIs whenever possible to access and collect data in an ethical and legal manner.

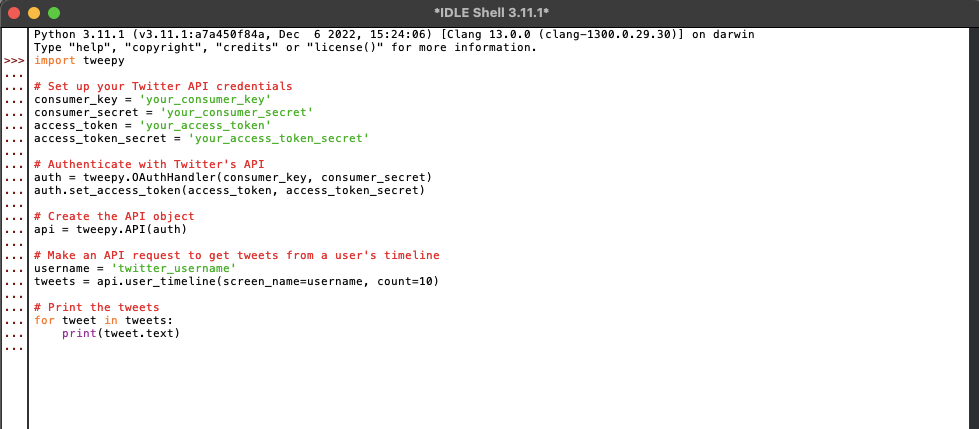

b. An example using Tweepy for making API requests?

For making API requests to Twitter’s API, you can use libraries like Tweepy in Python. Tweepy provides easy access to Twitter’s API. It abstracts the OAuth process and simplifies the interaction with Twitter’s API endpoints.

Here’s an example of how you can use ‘Tweepy’ to fetch tweets from Twitter’s API:

| import tweepy # Set up your Twitter API credentials consumer_key = ‘your_consumer_key’ consumer_secret = ‘your_consumer_secret’ access_token = ‘your_access_token’ access_token_secret = ‘your_access_token_secret’ # Authenticate with Twitter’s API auth = tweepy.OAuthHandler(consumer_key, consumer_secret) auth.set_access_token(access_token, access_token_secret) # Create the API object api = tweepy.API(auth) # Make an API request to get tweets from a user’s timeline username = ‘twitter_username’ tweets = api.user_timeline(screen_name=username, count=10) # Print the tweets for tweet in tweets: print(tweet.text) |

Applying the code.

The Python code provided above works as long as you have replaced ‘your_consumer_key’, ‘your_consumer_secret’, ‘your_access_token’, ‘your_access_token_secret’, and ‘twitter_username’ with your actual Twitter API credentials and the Twitter username you want to fetch tweets for.

In the next section, we will take a look at some of the best third-party web scraping tools to do the job (including no-code tools) when you cannot obtain data via APIs. In addition, If you are unfamiliar with Python and would like to try other programming languages specialized in statistical analysis and data visualization, we recommend you try web scraping with R.

5. The Top 4 Recommended Social Media Scraping Tools.

The following tools range from no-code scrapers to web scraping frameworks for extracting data and parsing HTML content. The top 4 scrapers listed here, enable the collection of data like posts, comments, and user profiles.

Note: Bear in mind that while web scraping Twitter and Facebook via these third-party services can be a viable option (if data cannot be obtained through APIs,) it is essential to consider its ethical and legal implications. When working with a website or service that offers an API, using the API is generally the recommended and preferred method to access and retrieve data. But with that being said, if you stick to the ToS and other rules like GDPR, and scrape publicly available data, you can do fine with these tools.

a. ParseHub

ParseHub is a powerful and free no-coding web scraping tool that allows you to literally turn any website into a spreadsheet or API. This tool comes with a user-friendly interface, where data extraction is as simple as clicking on the specific data elements you want to scrape.

ParseHub provides an efficient way to gather data from websites such as Twitter, Facebook, and other social platforms. It then converts it into a structured format for further analysis or integration into other applications. Learn more about ParseHub and how to use it, in our Parseub Review.

b. Octoparse

Octoparse is another no-code web scraping and data extraction tool. It offers a simple point-and-click interface, making it easy for users to extract data from various websites without any programming knowledge.

Octoparse can handle data extraction from any type of website and includes automatic IP rotation to prevent IP blocking. It can integrate with IPv6 proxies seamlessly to allow this IP rotation. Additionally, with Octoparse’s user-friendly interface, users can easily build web crawlers to scrape data efficiently and effectively.

c. Apify.

Apify offers web scraping, data extraction, and Robotic Process Automation (RPA) solutions. It serves as a one-stop solution for automating tasks that would otherwise be performed manually in a web browser.

With Apify, you can easily extract data from websites (including Facebook or Twitter), collect valuable information, and even automate repetitive processes efficiently. Apify provides a powerful set of tools to streamline tasks and enhance productivity.

d. Scrapy

Scrapy is an open-source and collaborative framework designed for web scraping and web crawling. It allows you to extract the necessary data from websites in a fast, simple, and highly extensible manner.

As a powerful tool, Scrapy allows developers and data analysts to efficiently gather information from various websites. Such capabilities make Scrapy valuable for tasks such as data mining, research, and data-driven applications.

6. Best practices for Twitter and Facebook Scraping.

In this section, we will go through the best ethical, legal, and technical practices for Facebook and Twitter scraping. Use this collection of best practices as a kind of checklist. Remember, although web scraping is not illegal, how you do it and what you scrape data, can make all the difference.

a. Comply with the site’s TOS and with other laws and regulations.

Ensure that your data scraping activities adhere to Twitter’s or Facebook’s Terms of Service (ToS). Always avoid scraping data without permission (as it may be unethical and illegal). Also respect data protection laws and regulations, such as the GDPR or CCPA, when collecting data from these social platforms.

b. Use the official APIs.

Using APIs ensure that you are collecting data in a way that is authorized by Facebook or Twitter. With APIs, you are complying with their terms of service. The APIs may have rate limits and usage restrictions, so make sure to review the API documentation and stick to the usage guidelines. If your web scraping project can’t get data from such APIs, ensure you are complying with the site ToS and with other laws.

c. Respect Rate Limits.

If you are using Facebook or Twitter APIs or making requests to their servers via other means (web scraping services, bots), respect the rate limits imposed by both social media platforms. Exceeding the rate limits suggested by these sites can make your API access temporarily or permanently suspended. If you are not using APIs, but still bypassing these limitations, the social media platform will use its rate-limiting mechanisms to stop your incoming network traffic.

d. Respect Robots.txt.

Pay attention to the website’s “robots.txt” file. This file provides instructions to web crawlers (or automated agents) about how they should access and interact with the website’s content. If a website asks crawlers not to access certain pages, then don’t access (or scrape) those pages.

e. Use IPv6 proxies.

When scraping data from Twitter or Facebook (through any method), it is always recommended to use proxies (IPv6 Proxies). Proxies can help prevent IP blocking, keep anonymity and privacy, bypass geographical restrictions, scrape at scale, and overall avoid any suspicion. With Proxies, you can easily bypass rate limitations (intentionally or unintentionally), so it is always advisable to regulate your proxy’s generated traffic to not bypass those restrictions.

f. Process scraped data.

After scraping, process the text data to analyze it further. You can use Natural Language Processing (NLP) tools or manually process the data using programming languages like Python.

g. Authenticate Properly.

If the data you want to access requires user authentication (for example, private user data), ensure that you have proper user consent and follow Facebook’s and Twitter’s authentication guidelines. Avoid accessing private or sensitive data without explicit permission from the users.

h. Do not scrape unauthorized data or sensitive information.

Avoid scraping data from private groups, pages, or profiles without proper authorization. Only collect data from public sources or groups where the data is intended to be publicly accessible. For instance, when scraping Twitter, you can typically collect various fields such as content, date, favorite count, handle, hashtag, name, replies, retweets, and URL. When scraping Facebook, you can collect relevant data fields such as pages, ads, events, profiles, hashtags, and posts. Always, respect the privacy of users and do not scrape sensitive information.

7. Final Words.

Twitter and Facebook, being the two most popular social platforms, hold a treasure trove of data that can significantly impact business strategies, research, and marketing efforts.

However, it is essential to approach scraping with the utmost respect for user privacy, the platform’s terms of service, and any other applicable law. Leveraging the power of APIs and following best practices will not only enhance the efficiency and accuracy of your data collection but will also ensure ethical and responsible scraping practices.

Remember, with great power comes great responsibility, so use these scraping techniques wisely and responsibly.

Happy scraping!

0Comments